The Release That Quietly Reset Expectations

On 13 June 2025, while CVPR attendees were still comparing poster sessions, Tencent dropped a GitHub commit titled simply “Release Hunyuan3D‑2.1.” Beneath that bland heading lay the first fully open‑source, production‑ready 3D generative model to ship with complete weights, training code, and a PBR‑aware texture pipeline.

Within forty‑eight hours the repository exceeded 700 stars, Product Hunt vaulted it into the day’s top launches, and Hugging Face downloads sped past 1.8 million—numbers unheard‑of for a model that still requires a hefty GPU.

Why Version 2.1 Is More Than a Point Release

Tencent had already impressed with Hunyuan 3D 2.0 in January, but that build shipped only inference checkpoints. Version 2.1 arrives with two headline upgrades:

- A fully open methodology. Researchers can inspect every line of training code, reproduce the data pipeline, or fine‑tune on proprietary datasets.

- Physically‑Based Rendering (PBR) textures by default. The new pipeline simulates metallic reflection, subsurface scattering, and anisotropic fabrics, erasing the tell‑tale “plastic” sheen common in AI meshes.

Blind tests conducted by Tencent show a 78 percent user preference for Hunyuan 3D 2.1 textures over previous models—an eye‑popping win rate in an arena where single‑digit gains are celebrated.

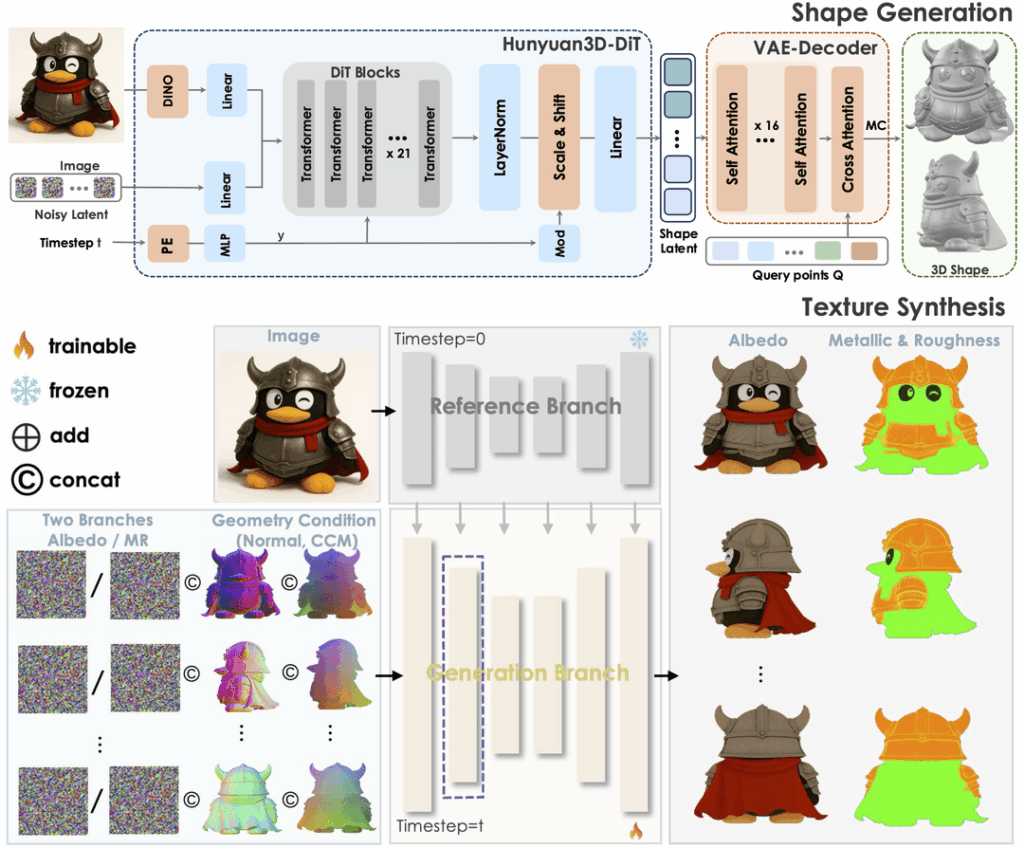

A Technical Deep Dive for Practitioners

| Feature | Detail | Source |
|---|---|---|
| Architecture | Flow‑based diffusion transformer (DiT) for shape; paired “Paint” network for textures | (github.com) |
| Model size | 3.3 B (shape) + 2 B (texture) parameters | (github.com) |
| VRAM footprint | 10 GB (shape), 21 GB (texture), 29 GB end‑to‑end | (github.com) |
| Operating systems | Windows, macOS, Linux | (github.com) |
| Benchmarks | Outperforms seven leading open‑ and closed‑source baselines on ULIP, CLIP, and LPIPS metrics | (github.com) |

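Those numbers carry consequences. A single RTX 4090 (24 GB) can hold either stage on its own, so it can produce showroom‑ready assets without a multi‑GPU cluster, although the 29 GB end‑to‑end figure means the two stages have to run one after the other or with a memory‑saving option such as the demo’s --low_vram_mode flag. Studios with A100s or H100s can batch‑generate entire prop libraries overnight.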
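The arithmetic behind that claim can be made explicit. The sketch below is illustrative only: it uses the VRAM figures quoted in the table, the function name `plan_for_gpu` and the strategy labels are hypothetical, and real headroom depends on drivers, resolution, and batch size.

```python
# Peak VRAM figures (GB) as quoted in the table; treat them as approximate.
STAGE_VRAM_GB = {"shape": 10, "texture": 21, "end_to_end": 29}


def plan_for_gpu(vram_gb: float) -> str:
    """Return a coarse run strategy for a card with `vram_gb` of memory.

    Hypothetical helper: the labels are this sketch's own, not Tencent's.
    """
    if vram_gb >= STAGE_VRAM_GB["end_to_end"]:
        return "both stages resident"
    if vram_gb >= max(STAGE_VRAM_GB["shape"], STAGE_VRAM_GB["texture"]):
        return "one stage at a time"
    if vram_gb >= STAGE_VRAM_GB["shape"]:
        return "shape only"
    return "insufficient"


if __name__ == "__main__":
    # Card memory sizes are the standard published capacities.
    for name, gb in [("RTX 4090", 24), ("A100 40GB", 40), ("RTX 3060", 12)]:
        print(f"{name}: {plan_for_gpu(gb)}")
```

Under these assumptions a 24 GB card lands in the "one stage at a time" bucket: each stage fits, but not both at once.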

From Single Image to Marketplace‑Ready Asset

The two‑stage workflow is both intuitive and flexible:

- Shape Generation (hy3dshape). Feed a reference image; the DiT network predicts a watertight mesh aligned to the picture plane.

- Texture Painting (hy3dpaint). The PBR module bakes albedo, normal, roughness, metallic, and displacement maps in one pass, calibrated for Blender, Unreal, or Unity.
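To make the hand-off between the two stages concrete, here is a minimal structural sketch. It does not call the real hy3dshape or hy3dpaint packages: `generate_shape`, `paint_texture`, `Mesh`, and `TexturedAsset` are hypothetical stand-ins, and the map names simply mirror the list above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Map names taken from the article's description of the texture stage.
PBR_MAPS = ("albedo", "normal", "roughness", "metallic", "displacement")


@dataclass
class Mesh:
    """Hypothetical stand-in for the untextured mesh from stage 1."""
    source_image: str
    watertight: bool = True


@dataclass
class TexturedAsset:
    """Hypothetical stand-in for the stage 2 result: mesh plus map files."""
    mesh: Mesh
    maps: Dict[str, str] = field(default_factory=dict)


def generate_shape(image_path: str) -> Mesh:
    """Placeholder for stage 1 (shape generation): image -> mesh."""
    return Mesh(source_image=image_path)


def paint_texture(mesh: Mesh) -> TexturedAsset:
    """Placeholder for stage 2 (texture painting): mesh -> PBR map set."""
    stem = mesh.source_image.rsplit(".", 1)[0]
    return TexturedAsset(mesh, {m: f"{stem}_{m}.png" for m in PBR_MAPS})


def image_to_asset(
    image_path: str,
    shape_fn: Callable[[str], Mesh] = generate_shape,
    paint_fn: Callable[[Mesh], TexturedAsset] = paint_texture,
) -> TexturedAsset:
    """Run the stages in order; either one can be swapped independently."""
    return paint_fn(shape_fn(image_path))


if __name__ == "__main__":
    asset = image_to_asset("chair.png")
    for name, path in asset.maps.items():
        print(name, "->", path)
```

Because the stages only share the mesh, a studio could in principle keep its own hand-modelled geometry and use just the painting step, or the reverse.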

Tencent’s repository even ships a Blender add‑on and a Gradio demo that launches with a single command, letting newcomers test‑drive the model without compiling C++.

Open Source as Strategy, Not Charity

Why would a tech giant give away cinema‑grade IP? The answer lies in ecosystem gravity. By making Hunyuan 3D the reference stack for asset generation, Tencent strengthens its position across gaming (TiMi Studio Group), social (QQ’s AR try‑ons), and the cloud GPU capacity it rents to third parties.

Moreover, the pattern echoes the LLM race: open models become the substrate for rapid community innovation, which in turn cements platform relevance. In an era when Unreal’s MetaHuman and Adobe’s Substance set the bar for fidelity, Tencent just offered a free alternative—complete with source code.

Competitive Landscape

| Model | License | PBR Support | VRAM ≤ 24 GB | Training Code |
|---|---|---|---|---|
| Hunyuan 3D 2.1 | Tencent Hunyuan Community License | Yes | ✔ | ✔ |
| Stable DreamFusion | Non‑commercial | No | ✘ | ✘ |
| LumaDreamer v1 | BSD | Rough only | ✔ | ✔ |
| TripoSG | MIT | No | ✔ | ✔ |

Early Industry Adoption Stories

- Mobile game studios have begun swapping concept sculpts for instant meshes, slashing grey‑box phases from weeks to days.

- E‑commerce retailers report 3× faster SKU digitisation for AR product viewers, thanks to the model’s leather‑and‑metal realism.

- Film pre‑visualisation teams feed storyboard frames to Hunyuan 3D, roughing out set pieces before the art department picks up ZBrush.

Each anecdote underscores a broader point: lowering asset cost rewires creative timelines.

Getting Started

    git clone https://github.com/Tencent-Hunyuan/Hunyuan3D-2.1
    cd Hunyuan3D-2.1
    pip install -r requirements.txt
    python gradio_app.py --low_vram_mode

That four‑line recipe spins up a browser demo on a consumer‑grade PC. Tencent’s docs then walk you through exporting GLB files, importing to Unreal, and tuning roughness maps for ray‑traced stages.
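Before handing an exported GLB to Unreal or another engine, a quick sanity check on the file header can catch truncated or mislabelled exports. The sketch below relies only on the GLB container layout from the glTF 2.0 specification (a 12-byte little-endian header: the magic bytes `glTF`, a version, and the total file length). The helper name `read_glb_header` is hypothetical and not part of Tencent's tooling.

```python
import struct

GLB_MAGIC = b"glTF"
# 12-byte GLB header: 4-byte magic, uint32 version, uint32 total length.
GLB_HEADER = struct.Struct("<4sII")


def read_glb_header(data: bytes) -> tuple:
    """Validate a GLB byte string and return (version, declared_length).

    Raises ValueError if the data is too short, lacks the magic bytes,
    or declares a length that differs from the actual size.
    """
    if len(data) < GLB_HEADER.size:
        raise ValueError("file too short to be a GLB")
    magic, version, length = GLB_HEADER.unpack_from(data)
    if magic != GLB_MAGIC:
        raise ValueError("missing glTF magic bytes")
    if length != len(data):
        raise ValueError(f"header declares {length} bytes, file has {len(data)}")
    return version, length


if __name__ == "__main__":
    import sys

    # Usage: python check_glb.py path/to/asset.glb
    with open(sys.argv[1], "rb") as fh:
        version, length = read_glb_header(fh.read())
    print(f"GLB container version {version}, {length} bytes")
```

This checks only the container, not the mesh or materials inside it, so it complements a visual inspection in the engine and does not replace one.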

What This Means for the 3D Content Supply Chain

Hunyuan 3D 2.1 compresses three expensive steps—modeling, UV unwrapping, and material authoring—into a single button press. For indies, it levels the playing field against studios with dedicated art teams. For enterprises, it halves prototype cycles and unlocks mass customisation (think automotive colourways or sneaker drops).

Publishing the weights and training code also means that academic labs can probe, extend, and critique the system, accelerating progress for everyone.

Final Word

In the long arc from ASCII art to photorealistic generative worlds, Tencent’s Hunyuan 3D 2.1 is a hinge moment. It proves that open code and production quality need not be trade‑offs, and it raises an implicit challenge to every proprietary pipeline still hiding its weights. For creators, the horizon just expanded; for competitors, the bar just moved—again.
