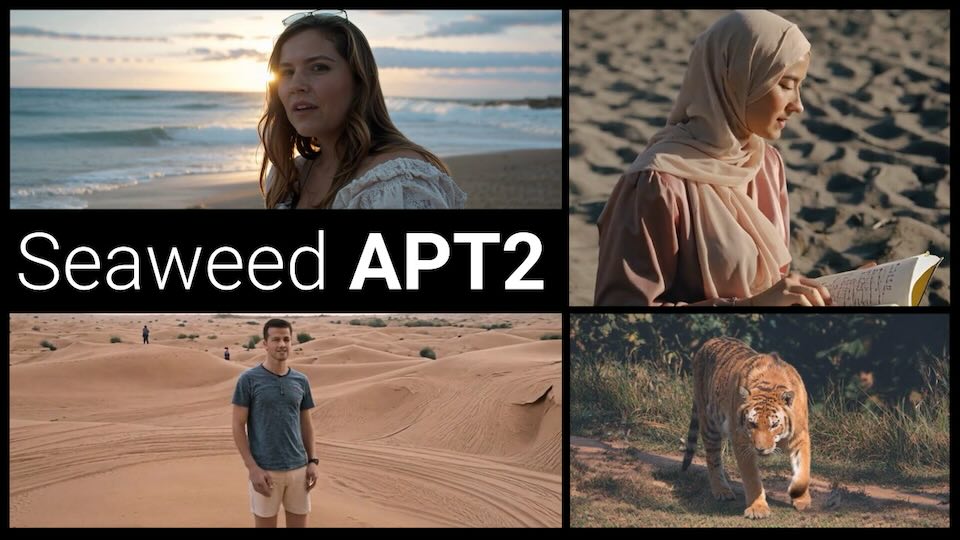

Why Seaweed APT2 Matters

On 13 June 2025, ByteDance quietly lifted the curtain on Seaweed APT2, a research‑grade video model capable of streaming 24‑frame‑per‑second footage while you steer the camera or puppeteer digital actors in the moment. The announcement drew instant reactions across X, LinkedIn, and Reddit, with creators calling it “one step closer to the Holodeck.”

Unlike most text‑to‑video systems, APT2 is designed for real‑time interactivity. You set an initial image or prompt, then guide the scene as it unfolds—no render queues, no stitched segments. The model’s arrival reframes the race to control the next generation of synthetic media, pushing the conversation beyond clip length or photorealism to the far thornier question of latency.

From APT1 to APT2: A Brief Lineage

ByteDance’s “Adversarial Post‑Training” (APT) series began last winter with APT1, a proof‑of‑concept that could generate 49‑frame GIF‑length videos. APT2 leaps forward on four axes:

| Metric | APT1 | APT2 |
|---|---|---|
| Frames per second | 12 fps | 24 fps |
| Continuous duration (single H100) | 4 s | 60 s+ |
| Latency per latent frame | ~100 ms | ~40 ms |
| User control | None | Pose & camera in real time |

APT2 achieves those figures through a novel autoregressive adversarial objective layered on a standard transformer backbone—an approach the research team calls AAPT (Autoregressive Adversarial Post‑Training).

Under the Hood: Adversarial Autoregression

Conventional diffusion models run many denoising passes per output, and next‑token methods build each frame piece by piece, so both incur heavy compute. APT2 instead generates one latent frame (equivalent to four RGB frames) per transformer pass, recycling each result as context for the next step. A paired discriminator scores realism in parallel, tightening temporal coherence without ballooning memory.
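To make the "recycle each result as context" idea concrete, here is a minimal toy sketch of an autoregressive streaming loop. It is not ByteDance's implementation: `stream_latents` and `toy_step` are hypothetical names, latents are plain floats rather than tensors, and the context is re-read as a list each step, where a real model would reuse a KV cache instead.

```python
from typing import Callable, Iterable, Iterator, List

FRAMES_PER_LATENT = 4  # from the article: one latent frame ~ four RGB frames


def stream_latents(
    first_latent: float,
    controls: Iterable[float],
    step_fn: Callable[[List[float], float], float],
) -> Iterator[float]:
    """Yield one latent per control input, feeding each output back as context."""
    context: List[float] = [first_latent]
    for control in controls:
        # One call to step_fn stands in for one transformer forward pass.
        latent = step_fn(context, control)
        context.append(latent)  # the new latent becomes context for the next step
        yield latent


def toy_step(context: List[float], control: float) -> float:
    """Hypothetical stand-in for the generator: last latent plus the control signal."""
    return context[-1] + control


latents = list(stream_latents(0.0, [1.0, 2.0, 3.0], toy_step))
rgb_frame_count = len(latents) * FRAMES_PER_LATENT
print(latents, rgb_frame_count)
```

Because the loop is a generator, each latent can be decoded and displayed as soon as it is produced, and the next control input (a pose or camera move) can be read only after the previous frame has been shown.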

The model weighs in at 8 billion parameters, slim by contemporary standards, yet its KV‑cache‑friendly architecture lets it exploit GPU memory like a language model. The result: 24 fps, 736 × 416‑pixel streams on a single NVIDIA H100, or 720p on an eight‑GPU node.
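The real-time claim can be turned into a time budget with simple arithmetic. The sketch below uses only figures quoted in the article (24 fps, four RGB frames per latent frame); the function names are illustrative, and the numbers are budgets implied by the frame rate, not measured latencies.

```python
from typing import Tuple


def frame_budget_ms(fps: float, frames_per_latent: int = 4) -> Tuple[float, float]:
    """Return the time budget in ms per RGB frame and per latent frame."""
    per_rgb_frame = 1000.0 / fps
    per_latent_frame = per_rgb_frame * frames_per_latent
    return per_rgb_frame, per_latent_frame


def latent_passes(duration_s: int, fps: int, frames_per_latent: int = 4) -> int:
    """Number of forward passes needed for a clip of the given duration."""
    return (duration_s * fps) // frames_per_latent


per_rgb, per_latent = frame_budget_ms(24)
print(round(per_rgb, 1), round(per_latent, 1))  # 41.7 166.7
print(latent_passes(60, 24))  # 360
```

At 24 fps, each RGB frame has roughly 41.7 ms, so one forward pass that yields four frames must finish in about 166.7 ms to keep up, and a one-minute stream needs 360 such passes.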

Interactive Modes: From Virtual Humans to World Exploration

APT2 ships with two showcase use cases:

- Virtual‑Human Puppetry — Feed one reference frame to lock identity, then drive body pose in real time; the model renders continuous motion with minimal drift.

- Camera‑Controlled World Exploration — Supply a starter frame of a scene, then move the virtual camera along six degrees of freedom. APT2 hallucinates unseen vistas on the fly, maintaining global lighting and perspective.

In both demos, latency remains low enough that a user with a VR headset or gamepad could feasibly “walk” through an AI‑imagined environment without motion sickness.

Benchmarks: How Fast Is “Real Time”?

- Single‑GPU Throughput — 24 fps at 736 × 416 resolution on a lone H100.

- Scaling Efficiency — Linear speed‑ups to 8× H100s yield 720p streams.

- Duration — ByteDance published one‑minute, full‑frame samples and internal tests up to five minutes before minor subject drift emerges.
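As a rough sanity check on the scaling figures, the pixel counts of the two stream sizes can be compared directly. This sketch assumes "720p" means 1280 × 720 (the article gives only the label) and uses the 736 × 416 single-GPU resolution quoted earlier; `pixel_count` is an illustrative helper.

```python
def pixel_count(width: int, height: int) -> int:
    """Pixels per frame at the given resolution."""
    return width * height


single_gpu_pixels = pixel_count(736, 416)
node_pixels = pixel_count(1280, 720)  # assumption: 720p = 1280 x 720
pixel_ratio = node_pixels / single_gpu_pixels

print(single_gpu_pixels, node_pixels, round(pixel_ratio, 2))  # 306176 921600 3.01
```

Under that assumption, the eight-GPU stream carries about three times as many pixels per frame as the single-GPU stream. Pixel count is only a proxy for cost here, since self-attention grows faster than linearly with the number of tokens.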

For context, OpenAI’s Sora teaser reaches similar resolution but takes minutes per clip; Google’s Veo 2 previewed long shots yet still renders offline. APT2 is the first public research artifact to prove live 60‑second generation on commodity cloud hardware.

Strategic Implications for ByteDance

ByteDance now fields a tiered video stack:

| Layer | Model | Purpose |
|---|---|---|
| Foundational | Seaweed‑7B | High‑quality, long‑form text/image‑to‑video |
| Fast Generator | APT1 | One‑shot clips, diffusion baseline |
| Interactive Streamer | APT2 | Real‑time, user‑controlled worlds |

By attacking both fidelity (Seaweed) and latency (APT2), the company positions itself against OpenAI’s Sora, Google’s Veo, and Runway’s Gen‑4 Turbo, each strong in one dimension but not both. Investors already view TikTok as a testbed for consumer AI video; APT2 deepens that moat by promising experiences that cannot be replicated on platforms built for prerecorded clips.

Potential Applications

- Live Storytelling & Streaming — Imagine a Twitch creator improvising scenes mid‑broadcast while viewers vote on plot twists in real time.

- Virtual Production — Directors could scout AI‑generated sets, moving a virtual camera to frame shots before a single stage light is rented.

- Immersive Training — HR teams run dynamic role‑playing exercises (“difficult customer,” “tense negotiation”) that adjust to participant input.

- Assistive Content Creation — Small studios generate placeholder footage on‑the‑fly, replacing it later with final renders only when necessary.

Each use case depends on low-latency feedback loops—precisely APT2’s selling point.

Limitations ByteDance Admits Upfront

- Fast‑Changing Motion — One‑network‑forward evaluation (1NFE) struggles with sudden scene changes.

- Long‑Term Memory — The sliding‑window attention forgets distant frames, causing gradual drift beyond five minutes.

- Physics Consistency — Objects sometimes clip or violate gravity under strenuous camera paths.

- Alignment and Safety — APT2 lacks the human‑preference tuning that shores up diffusion models against unwanted content (source: seaweed-apt.com).

ByteDance’s paper calls APT2 an “early research work,” a reminder that commercialization will require additional guardrails.
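The long-term memory limitation listed above follows directly from how a sliding window works. The sketch below is a generic illustration, not APT2's attention code: the window size of 4 is a made-up value (the article does not state the real one), and both function names are hypothetical.

```python
def visible_frames(step: int, window: int) -> range:
    """Indices of the latent frames a sliding window can attend to at `step`."""
    return range(max(0, step - window + 1), step + 1)


def first_step_without(frame_index: int, window: int) -> int:
    """First step at which `frame_index` has slid out of the window."""
    return frame_index + window


WINDOW = 4  # hypothetical window size, chosen only for illustration

print(list(visible_frames(2, WINDOW)))   # [0, 1, 2]
print(list(visible_frames(10, WINDOW)))  # [7, 8, 9, 10]
print(first_step_without(0, WINDOW))     # 4
```

Once the starting frame drops out of the window, nothing anchors later frames to it directly, so small errors can accumulate from step to step. That is one plausible reading of the gradual drift described in the list.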

The Road Ahead

ByteDance’s roadmap hints at CameraCtrl II for 3D‑aware navigation and SeedVR2 for live super‑resolution—components likely to merge with APT2 in future iterations. Meanwhile, rival labs are sure to publish counter‑demos optimized for similar latency.

Two questions now dominate investor calls and academic forums alike:

- Can adversarial autoregression scale to 4K and beyond without exploding compute?

- Will real‑time generation birth entirely new content categories—AI sitcoms, perpetual worlds, adaptive ads—or merely shave hours off current pipelines?

The answers will shape not only AI research budgets but also how billions consume video in the streaming decade.

Key Takeaways for Creators and CTOs

- Latency is the new frontier. Quality gains still matter, but interactivity changes the value proposition.

- ByteDance owns the “fast lane.” Until Sora or Veo proves comparable live throughput, APT2 stands alone.

- Infrastructure counts. Real‑time 24 fps on a single H100 implies sustainable cloud costs; that widens the addressable market.

- Expect rapid iteration. APT0 appeared in January, APT1 in March, APT2 in June. APT3 could arrive before year‑end.

For now, Seaweed APT2 signals that the leap from watching AI video to inhabiting it is no longer theoretical. ByteDance just pushed the industry’s Overton window again—and the countdown to fully interactive, endlessly generated worlds has officially begun.
